Introduction

In my thesis, I combined well-established robust estimators such as RANSAC with deep learning techniques in order to find multiple geometric models within 2D or 3D observations.

During my time as a PhD student, I have published multiple papers (CVPR, T-PAMI, AAAI, ICRA, GCPR) presenting novel Computer Vision algorithms as well as new datasets. I have also served as a reviewer for conferences and journals including CVPR, ICCV, ECCV, T-PAMI and GCPR.

In 2022, I spent four months as a Research Scientist Intern at Meta Reality Labs London, UK.

Previously, I studied Electrical Engineering and Information Technology (BSc and MSc) at Leibniz University Hannover, Germany, spent a semester abroad at Yonsei University in Seoul, and visited Sony R&D in Tokyo for an internship.

Feel free to also check out my LinkedIn, Google Scholar and GitHub profiles.

Publications

PARSAC: Accelerating Robust Multi-Model Fitting with Parallel Sample Consensus

Florian Kluger, Bodo Rosenhahn

AAAI 2024

Paper, Code, Poster

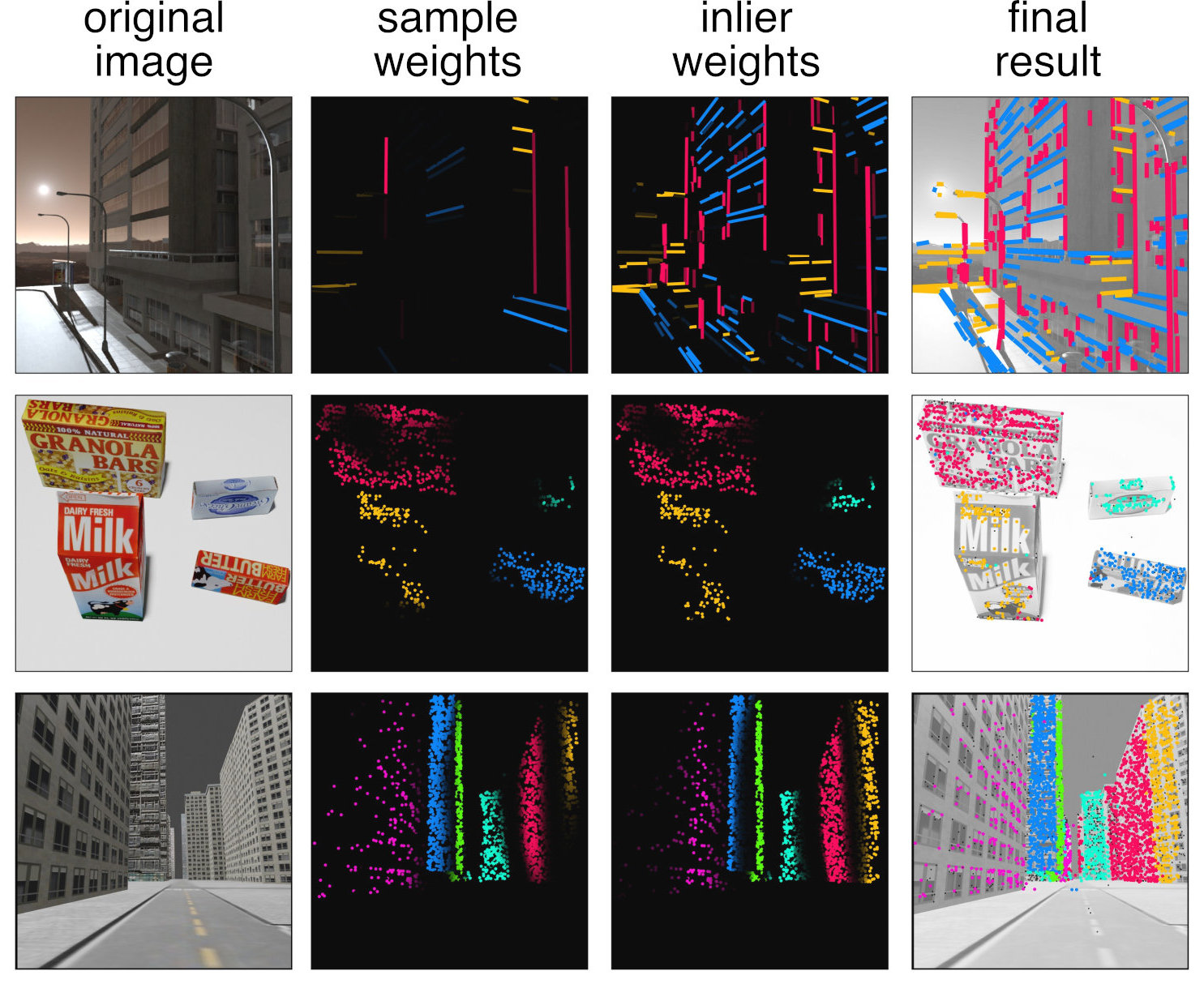

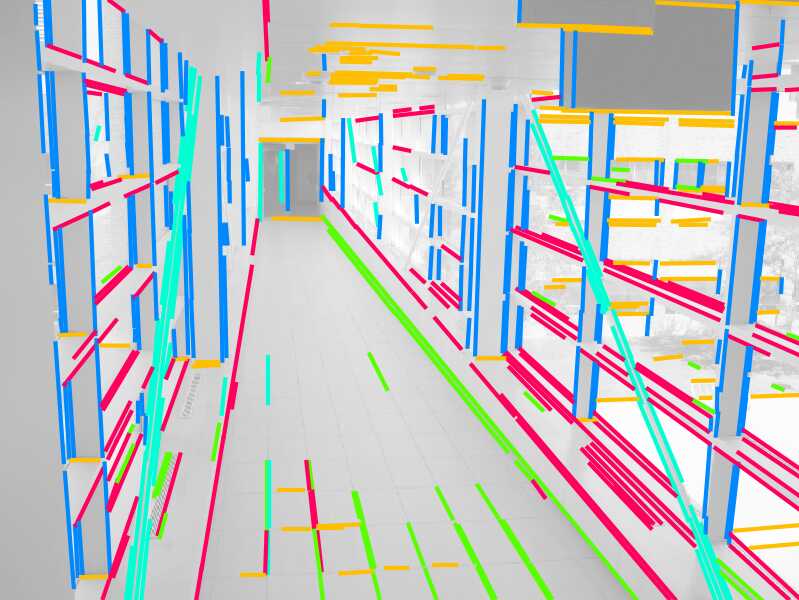

We present a learning-based real-time method for robust estimation of multiple instances of geometric models from noisy data. Our method detects all model instances independently and in parallel. We demonstrate state-of-the-art performance on multiple datasets, with inference times as low as five milliseconds per image.

Robust Shape Fitting for 3D Scene Abstraction

Florian Kluger, Eric Brachmann, Michael Ying Yang, Bodo Rosenhahn

T-PAMI 2024

Paper, Code

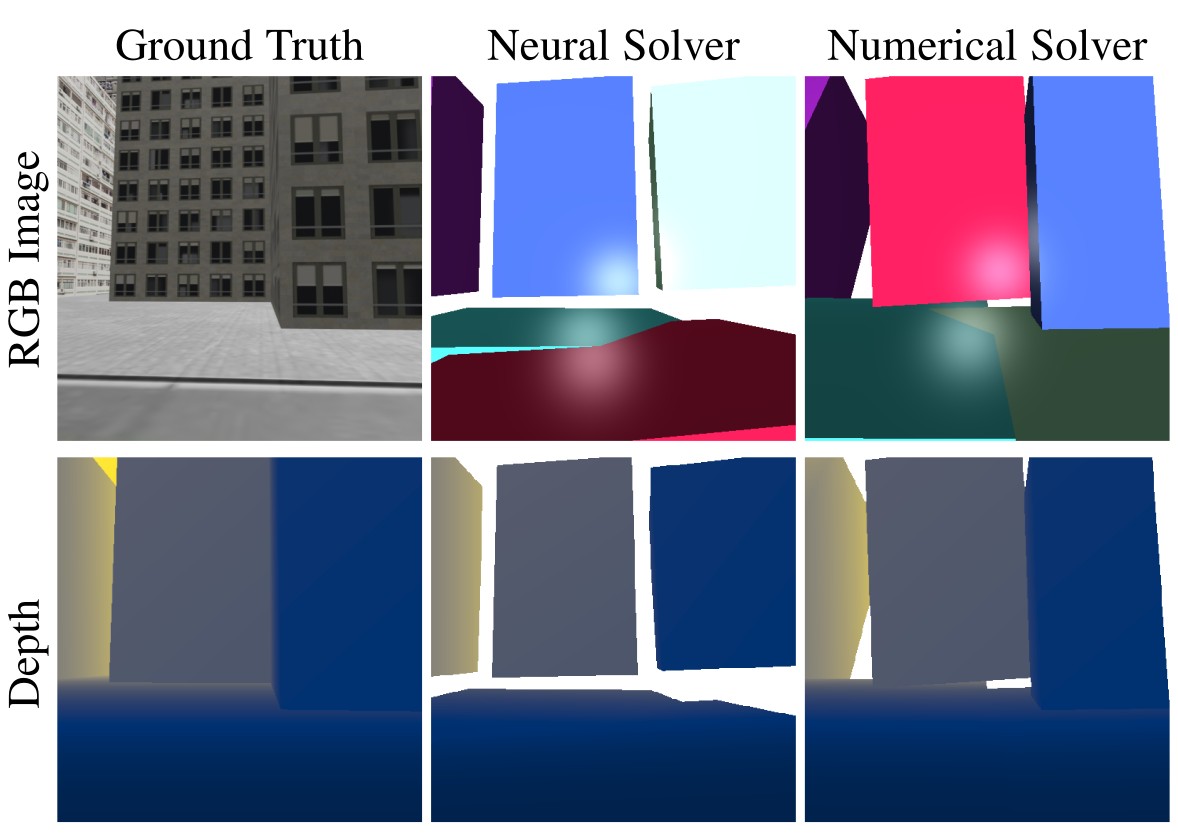

Extending our previous work, Cuboids Revisited (CVPR 2021), we present improvements to our 3D primitive fitting approach, including a novel Transformer-based cuboid estimator, as well as more comprehensive empirical analyses.

CONSAC: Robust Multi-Model Fitting by Conditional Sample Consensus

Florian Kluger, Eric Brachmann, Hanno Ackermann, Carsten Rother, Michael Ying Yang, Bodo Rosenhahn

CVPR 2020

Paper, Code, Poster, Video

We present the first learning-based method for robust multi-model fitting and achieve state-of-the-art results for homography and vanishing point estimation.

Temporally Consistent Horizon Lines

Florian Kluger, Hanno Ackermann, Michael Ying Yang, Bodo Rosenhahn

ICRA 2020

Paper, Code

We present a novel CNN architecture with an improved residual convolutional LSTM for temporally consistent horizon line estimation, which consistently achieves superior performance compared with existing methods.

Region-based Cycle-Consistent Data Augmentation for Object Detection

Florian Kluger, Christoph Reinders, Kevin Raetz, Philipp Schelske, Bastian Wandt, Hanno Ackermann, Bodo Rosenhahn

IEEE Big Data Workshops 2018

Paper, Code

Winner of the Special Prize for proposing innovative ideas and contributing to the Road Damage Detection and Classification Workshop at IEEE Big Data 2018.

Deep Learning for Vanishing Point Detection Using an Inverse Gnomonic Projection

Florian Kluger, Hanno Ackermann, Michael Ying Yang, Bodo Rosenhahn

GCPR 2017

Paper, Code

We present a novel CNN-based approach for vanishing point detection from uncalibrated monocular images, which achieves competitive performance on three horizon estimation benchmark datasets.

Datasets

SMH: Synthetic Metropolis Homographies

github.com/fkluger/smh

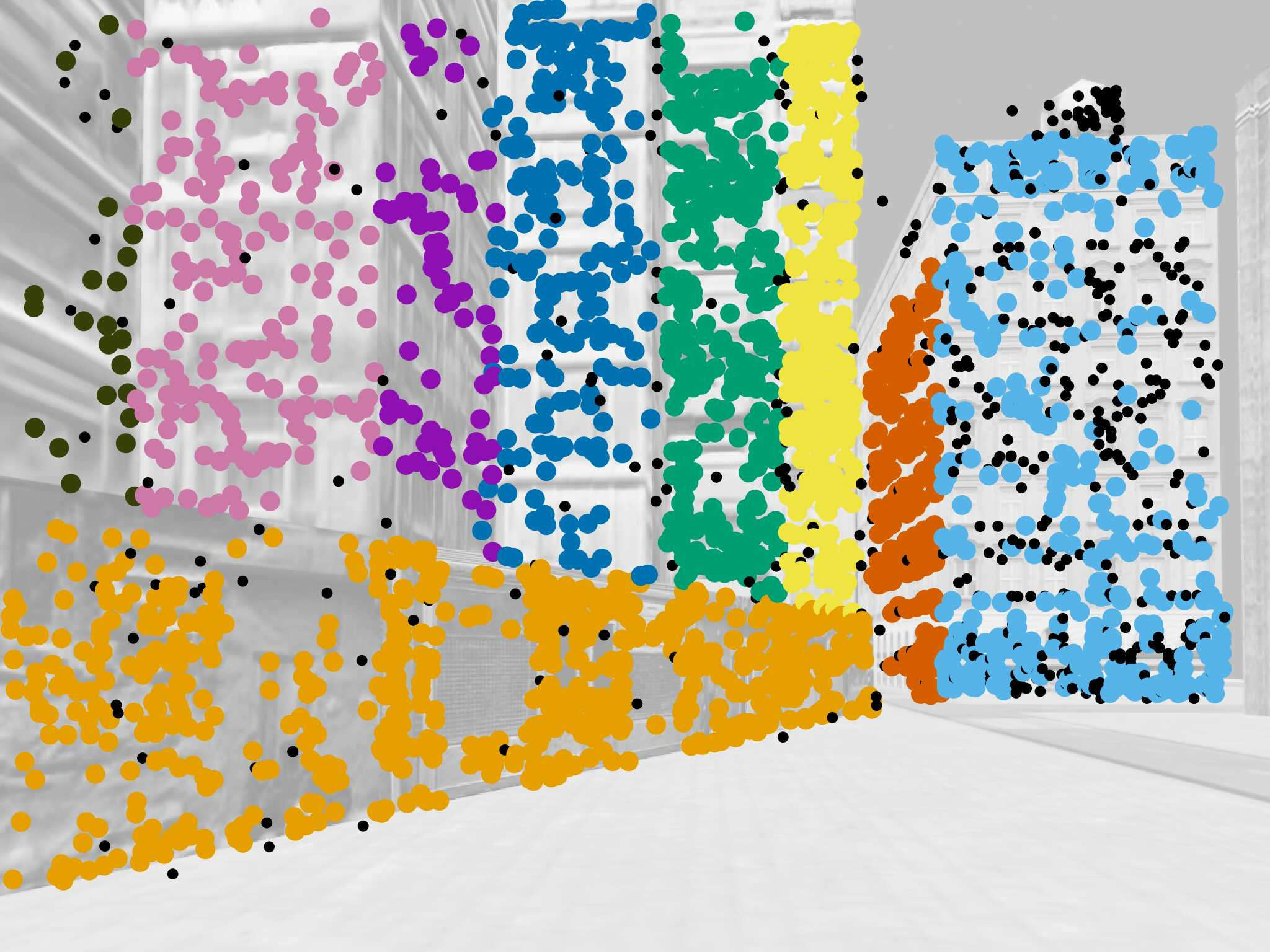

SMH is a synthetic dataset for multiple homography fitting based on a 3D model of a city. A total of 48002 image pairs are rendered along trajectories within the 3D model. We provide ground truth homographies for the visible planes in each image pair, as well as pre-computed SIFT features with ground truth labels.

HOPE-F

github.com/fkluger/hope-f

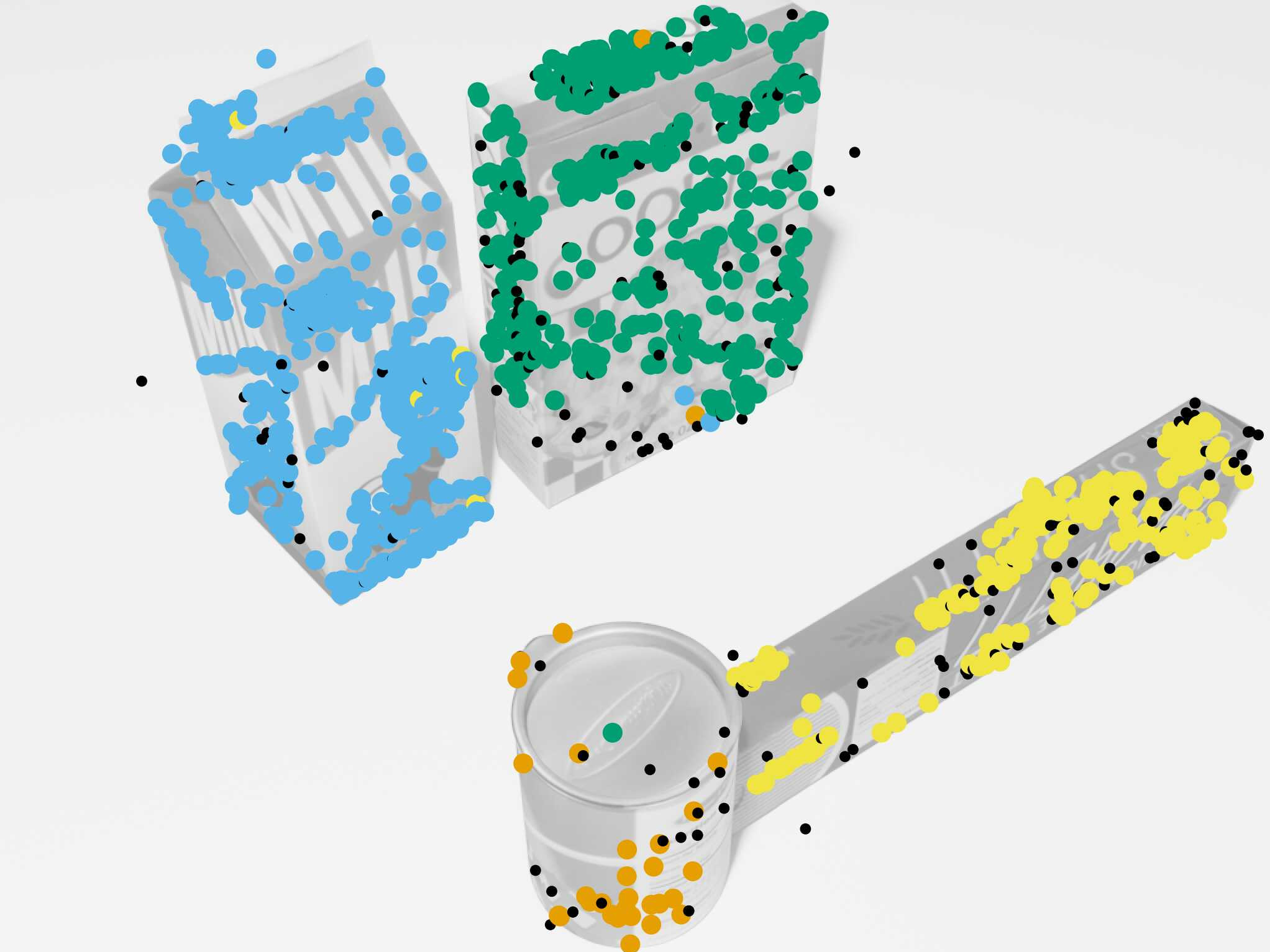

HOPE-F is a synthetic dataset for multiple fundamental matrix fitting containing 4000 image pairs. We use a subset of 22 textured 3D meshes from the HOPE dataset to render image pairs showing between one and four objects. HOPE-F provides ground truth fundamental matrices for each object and pre-computed SIFT keypoint features with ground truth labels.

NYU-VP

github.com/fkluger/nyu_vp

NYU-VP is the first large-scale vanishing point dataset suitable for deep learning. It is based on the NYU Depth v2 dataset and contains 1449 images of indoor scenes. We manually labelled up to eight vanishing points per image and reserved 225 images for testing.

YUD+

github.com/fkluger/yud_plus

YUD+ is an extension of the York Urban Database (YUD), which contains 102 images. While YUD has three Manhattan vanishing points labelled in each image, YUD+ provides up to five additional vanishing points per image.

KITTI Horizon

github.com/fkluger/kitti_horizon

KITTI Horizon provides ground truth labels for the geometrical horizon line for 72 image sequences of the KITTI dataset, comprising 43699 frames in total.